Language models sound confident by default: they emit tokens, and we read sentences. But under the hood, every token is a choice drawn from a probability distribution. Certain makes those probabilities visible in a compact chat UI, so you can literally see where the model is certain and where it hesitates.

→ Try Certain here

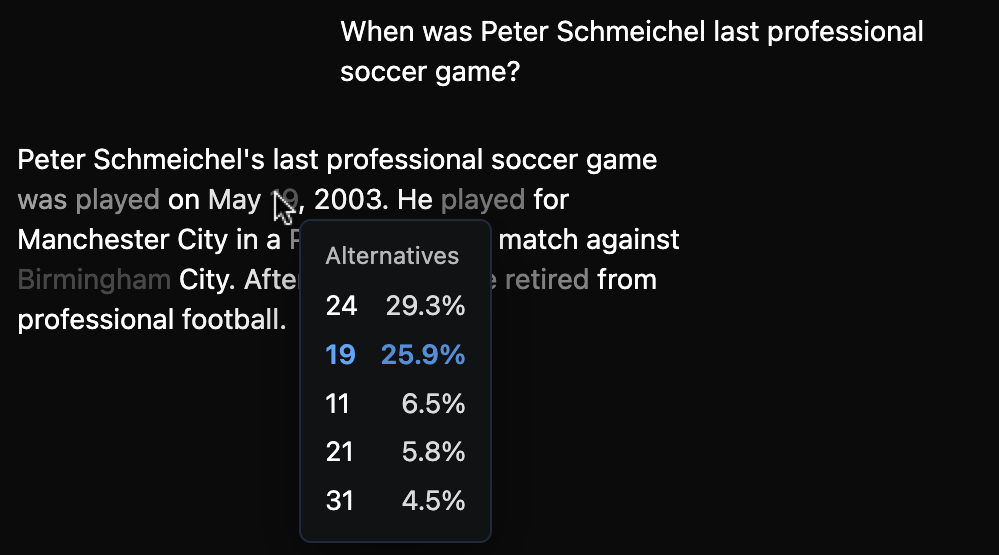

The core idea is simple: we request token-level logprobs from the OpenAI Chat Completions API and render each token with an opacity that reflects how confident the model was, so uncertain tokens fade. You can choose between two views: Prob. (opacity ∝ probability of the selected token) and Gini (opacity ∝ inverse Gini over the top‑5 alternatives). Hold Alt to toggle the visualization on and off while reading. Hover over any token to see a small tooltip with its top alternatives and their probabilities. The left margin also shows the geometric mean of the token probabilities across the response, giving you a quick, single-number sense of confidence.
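Here is a minimal sketch of the request and the single-number summary, assuming the raw JSON shape of the Chat Completions API: the request sets `logprobs` and `top_logprobs`, and the response carries one entry per generated token in `choices[0].logprobs.content`. The helper names `build_request` and `geometric_mean_prob` are hypothetical and not taken from Certain's source.

```python
import math


def build_request(model: str, messages: list[dict], top_k: int = 5) -> dict:
    """Body for POST /v1/chat/completions that asks for token logprobs."""
    return {
        "model": model,
        "messages": messages,
        "logprobs": True,       # return the logprob of each sampled token
        "top_logprobs": top_k,  # plus the top-k alternatives at each position
    }


def geometric_mean_prob(content: list[dict]) -> float:
    """Geometric mean of the selected-token probabilities.

    `content` is assumed to be choices[0]["logprobs"]["content"] from the response.
    exp(mean(logprob)) equals (p_1 * ... * p_n) ** (1/n) but avoids underflow.
    """
    if not content:
        return float("nan")
    mean_logprob = sum(t["logprob"] for t in content) / len(content)
    return math.exp(mean_logprob)


# Made-up two-token response, for illustration only.
demo = [{"token": "Paris", "logprob": math.log(0.9)},
        {"token": ".", "logprob": math.log(0.4)}]
print(round(geometric_mean_prob(demo), 3))  # 0.6
```

Averaging logprobs and then exponentiating gives the same value as taking the n-th root of the product of probabilities, but it stays numerically stable on long responses where the raw product would underflow to zero.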

Access is handled through a small modal. Click the "API Key" button in the header to paste your key; it is stored only in your browser's localStorage. No key? Click Demo to load an example conversation so you can still get a feel for the UI. I also added a model selector with the main OpenAI models that support token probabilities (e.g. gpt-4o, gpt-4o-mini, gpt-4.1, gpt-4.1-mini). Everything persists to localStorage, so your preferences stick between visits. Note that popular reasoning models such as GPT-5 do not support token probabilities.

Under the hood, token processing is straightforward: from each token's logprob we compute p = exp(logprob) and collect the top‑k alternatives, whose probabilities power the tooltip. For the Gini-style view, we renormalize the top‑5 probabilities so they sum to 1 and use their squared L2 norm, Σ pᵢ², as the inverse Gini score. This is exactly 1 minus the Gini impurity: it equals 1 when a single token takes all the mass and falls to 1/5 when the five alternatives are equally likely. We also stabilize opacity across some boundaries, such as punctuation and quotes, to avoid distracting flicker.
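The per-token processing might look roughly like the sketch below. It is not Certain's actual code: `token_view` is a hypothetical helper, its input is assumed to be one entry of `choices[0].logprobs.content`, and the linear rescaling of the Gini score from [1/n, 1] onto [0, 1] is our own assumption about how such a score could be turned into an opacity.

```python
import math


def token_view(entry: dict, k: int = 5) -> dict:
    """Turn one logprobs.content entry into the values the UI needs.

    `entry` is assumed to look like {"token": str, "logprob": float,
    "top_logprobs": [{"token": str, "logprob": float}, ...]}.
    """
    p = math.exp(entry["logprob"])  # probability of the selected token

    # Top-k alternatives as (token, probability), most likely first (tooltip data).
    alts = sorted(
        ((a["token"], math.exp(a["logprob"])) for a in entry.get("top_logprobs", [])),
        key=lambda pair: pair[1],
        reverse=True,
    )[:k]

    # Renormalize the top-k probabilities so they sum to 1.
    total = sum(q for _, q in alts)
    probs = [q / total for _, q in alts] if total > 0 else [1.0]

    # Squared L2 norm = 1 - Gini impurity: 1.0 if one token dominates, 1/n if flat.
    concentration = sum(q * q for q in probs)

    # Hypothetical opacity mapping (an assumption): rescale [1/n, 1] onto [0, 1].
    n = len(probs)
    gini_opacity = 1.0 if n == 1 else (concentration - 1 / n) / (1 - 1 / n)

    return {
        "p": p,
        "alternatives": alts,
        "concentration": concentration,
        "prob_opacity": p,  # Prob. view: opacity proportional to p
        "gini_opacity": gini_opacity,
    }


# Made-up entry: the model picked "a" with p = 0.9 over "b" with p = 0.1.
entry = {"token": "a", "logprob": math.log(0.9),
         "top_logprobs": [{"token": "a", "logprob": math.log(0.9)},
                          {"token": "b", "logprob": math.log(0.1)}]}
view = token_view(entry)
print(round(view["concentration"], 2), round(view["gini_opacity"], 2))  # 0.82 0.64
```

The rescaling is one simple choice among many: without it, even a perfectly flat top‑5 distribution would still render at 20% opacity, because Σ pᵢ² never drops below 1/n.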

Why build this? Two reasons. First, trust: seeing uncertainty helps you calibrate when to double‑check. Second, exploration: it’s delightful to hover tokens and peek at the paths the model almost took. It turns text generation from a black box into a legible, probabilistic process.