I’ve been using structured generation a lot lately, and while it’s great that Gemini and OpenAI now support it natively, I find it less robust than I want, and comparing providers requires overly complex setups. I’m a huge fan of Outlines, but it isn’t reliable for frontier models served through public APIs like OpenAI’s, which don’t expose the full next-token logits that Outlines needs to constrain decoding.

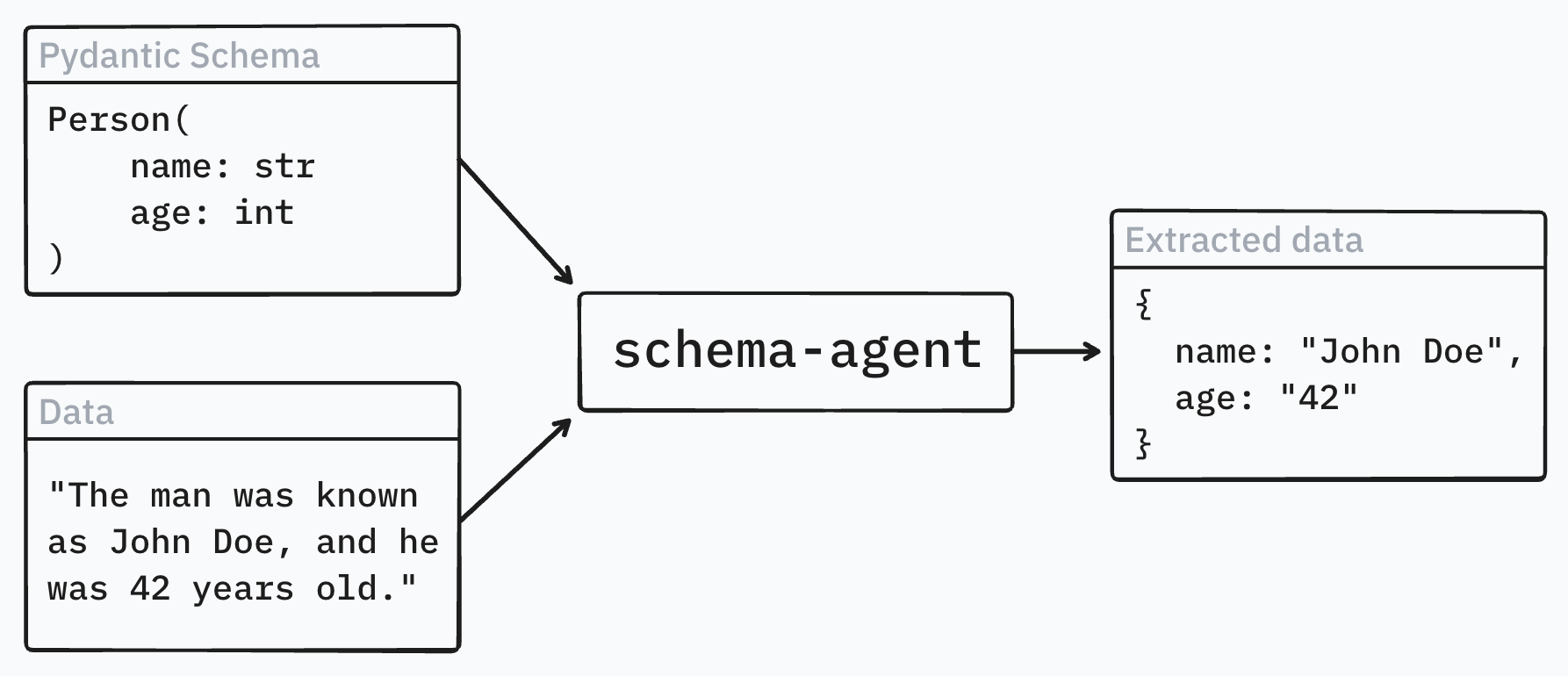

So I built schema_agent: a tiny, practical helper that wraps a model with a validation tool and retries on failure until the output matches your Pydantic schema.

It’s provider-agnostic: pass a LangChain-compatible model or just a string like "openai:gpt-4o-mini", and you get back a validated BaseModel instance plus the raw agent trace for debugging.
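To make the "model instance or string" idea concrete, here is a hypothetical sketch of how an `llm` argument could be normalized: strings of the form "provider:model" are split into a small spec, and anything else is assumed to be a ready-to-use chat model and passed through unchanged. `parse_llm`, `ModelSpec`, and the `ChatModel` protocol are illustrative names, not schema_agent's actual API.

```python
from dataclasses import dataclass
from typing import Any, Protocol, Union


class ChatModel(Protocol):
    """Hypothetical stand-in for a LangChain-style chat model: anything with invoke()."""

    def invoke(self, prompt: str) -> Any: ...


@dataclass(frozen=True)
class ModelSpec:
    """A model identified by a "provider:model" string, e.g. "openai:gpt-4o-mini"."""

    provider: str
    model: str


def parse_llm(llm: Union[str, ChatModel]) -> Union[ModelSpec, ChatModel]:
    """Hypothetical helper: split "provider:model" strings, pass model objects through."""
    if isinstance(llm, str):
        # Split on the first colon only, so model names may themselves contain colons.
        provider, sep, model = llm.partition(":")
        if not sep or not provider or not model:
            raise ValueError(f"expected 'provider:model', got {llm!r}")
        return ModelSpec(provider=provider, model=model)
    # Not a string: assume it is already a chat model instance and use it as-is.
    return llm


print(parse_llm("openai:gpt-4o-mini"))  # -> ModelSpec(provider='openai', model='gpt-4o-mini')
```

Splitting on the first colon only keeps model names like "ollama:llama3:8b" intact (provider "ollama", model "llama3:8b").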

How it feels to use

```python
from pydantic import BaseModel, Field

from schema_agent import generate_with_schema


class Person(BaseModel):
    name: str = Field(description="Full name")
    age: int = Field(description="Age in years")


resp = generate_with_schema(
    user_prompt="His name was John Doe and he was 42 years old",
    llm="openai:gpt-4o-mini",  # or a LangChain model instance
    schema=Person,
    max_retries=2,
)

# Validated Pydantic instance
print(repr(resp["reply"]))  # -> Person(name='John Doe', age=42)
print(resp["success"])  # -> True
print(resp["retries"])  # -> 0 if the first answer validated, otherwise up to max_retries
```

That’s it. Under the hood, the agent always runs its output through a validate tool first. If the tool raises a schema or JSON error, the agent gets a clear retry signal and tries again. Unexpected tool errors are surfaced immediately, so you can fix real issues instead of guessing.
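Here is a minimal, stdlib-only sketch of that validate-and-retry loop. It assumes a plain `call_model(prompt) -> str` callable instead of a LangChain agent with tool calls, and the field/type check is a crude stand-in for Pydantic (with Pydantic v2 you would call `Person.model_validate_json(raw)` and catch `ValidationError`). `validate`, `RetryableError`, and `generate_with_retries` are hypothetical names, not schema_agent's implementation.

```python
import json
from typing import Callable, Dict


class RetryableError(Exception):
    """A JSON or schema problem the model can fix by trying again."""


def validate(raw: str, schema: Dict[str, type]) -> dict:
    """Hypothetical validate tool: parse JSON, then check required fields and types."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise RetryableError(f"invalid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise RetryableError("expected a JSON object")
    for field, expected in schema.items():
        if field not in data:
            raise RetryableError(f"missing field {field!r}")
        if not isinstance(data[field], expected):
            raise RetryableError(f"field {field!r} should be {expected.__name__}")
    return data


def generate_with_retries(
    call_model: Callable[[str], str],
    prompt: str,
    schema: Dict[str, type],
    max_retries: int = 2,
) -> dict:
    """Call the model, validate its answer, and feed validation errors back on failure."""
    feedback = ""
    for attempt in range(max_retries + 1):
        # Errors raised by call_model (e.g. network failures) are not caught here,
        # so they surface immediately instead of consuming a retry.
        raw = call_model(prompt + feedback)
        try:
            reply = validate(raw, schema)
        except RetryableError as exc:
            # Retry signal: tell the model what was wrong and ask again.
            feedback = f"\n\nYour previous answer failed validation ({exc}). Reply with corrected JSON only."
            continue
        return {"reply": reply, "success": True, "retries": attempt}
    return {"reply": None, "success": False, "retries": max_retries}


# Usage with a stub model whose first answer has the wrong type for "age".
answers = iter(['{"name": "John Doe", "age": "42"}', '{"name": "John Doe", "age": 42}'])
result = generate_with_retries(
    lambda prompt: next(answers),
    "His name was John Doe and he was 42 years old",
    {"name": str, "age": int},
)
print(result)  # -> {'reply': {'name': 'John Doe', 'age': 42}, 'success': True, 'retries': 1}
```

The design choice is the split between exception types: only `RetryableError` is caught and turned into feedback for the next attempt, while anything else (a provider outage, a bug in the tool) propagates on the spot rather than burning retries.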

Why I like this approach

- Schema-first: say what you want, get exactly that

- Minimal API surface: a single function, predictable behavior

- Robust across providers: no reliance on provider-specific JSON modes

- Typed outputs: you keep your BaseModel, not a dictionary

If you want to try it, it’s on PyPI as schema_agent, and the code is on GitHub.

There’s a short demo script in scripts/demo.py.

It’s small, pragmatic, and made to be dropped into real workflows. If it breaks for your use case, I’d love to hear why.